Kyurae Kim

I am a fourth-year PhD student advised by Professor Jacob R. Gardner at the University of Pennsylvania, working on Bayesian inference, stochastic optimization, Markov chain Monte Carlo sampling, and Bayesian optimization. I collaborate with Professors Yi-An Ma, Alain Oliviero Durmus, and Trevor Campbell.

I received my Bachelor of Engineering degree from Sogang University, South Korea, where I did undergraduate research under Professors Hongseok Kim, Tai-kyong Song, Sungyong Park, and Youngjae Kim. During this time, I also worked at Samsung Medical Center, South Korea, as an undergraduate researcher, at Kangbuk Samsung Hospital, South Korea, as a visiting researcher, and at Hansono, South Korea, as a part-time embedded software engineer. After graduating, I was a research associate at the University of Liverpool under Professors Simon Maskell and Jason F. Ralph. I hold memberships in the ACM, ISBA, and the IEEE (which implies that I’m a good tipper…).

I previously worked on medical imaging, computer systems, high-performance computing, and array signal processing, and I’m also broadly interested in topics such as computational statistics, programming languages, and optimization. Here is a list of papers that I have found interesting over my career.

Software

I am active within Julia’s computational statistics community as part of the Turing language team.

- AdvancedVI.jl: Variational inference algorithms in Julia.

- SliceSampling.jl: Slice sampling MCMC algorithms in Julia.

- MCMCTesting.jl: Correctness tests for MCMC algorithms in Julia.

productivity tools that I use

(Last updated on 3 February 2025)

I rely heavily on cross-platform, open-source software tools.

- Emacs (heavily customized) for writing code

- Magit for accessing Git within Emacs

- Zotero for managing citations and exporting BibTeX

- Inkscape for drawing vector diagrams (but also TikZ if not in a rush)

- Veusz for quick publication-quality plots (but also PGFPlots and Makie.jl for fancier stuff)

- Google Scholar PDF Reader and Evince for viewing, and Foxit Reader for editing and annotating, PDF files

- Nomacs for viewing a lot of image files quickly (on Windows, FastStone is hard to beat)

- Flameshot for taking screenshots

news

| Date | News |
|---|---|
| Sep 18, 2025 | 1 paper on BBVI and 1 paper on BayesOpt have been accepted to NeurIPS’25. |
| May 1, 2025 | Our paper on tuning SMC samplers has been accepted to ICML’25. |
| Oct 26, 2024 | I will be back in South Korea from December 6 to December 25. |
| Sep 25, 2024 | 1 paper on BayesOpt has been accepted to NeurIPS’24 as a spotlight. |
| Aug 26, 2024 | I will be in San Francisco from August to November. |

selected publications

-

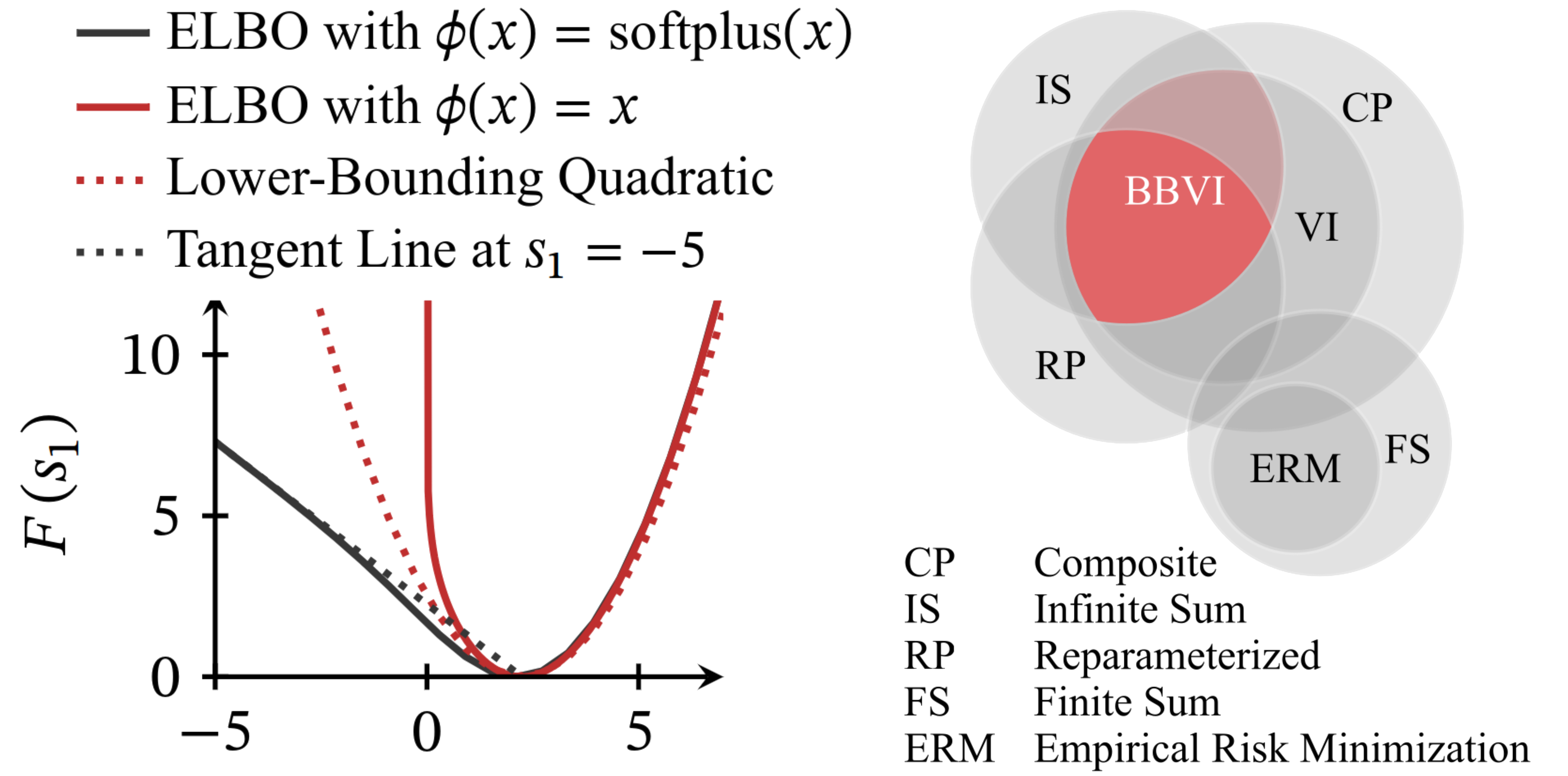

Nearly Dimension-Independent Convergence of Mean-Field Black-Box Variational InferenceIn Advances in Neural Information Processing Systems Dec 2025

-

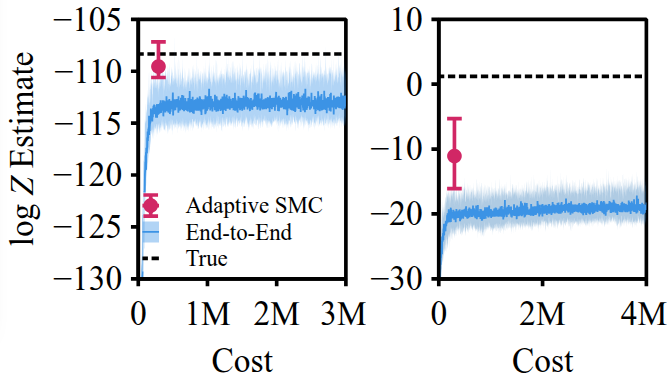

Tuning Sequential Monte Carlo Samplers via Greedy Incremental Divergence MinimizationIn Proceedings of the International Conference on Machine Learning Jul 2025

Tuning Sequential Monte Carlo Samplers via Greedy Incremental Divergence MinimizationIn Proceedings of the International Conference on Machine Learning Jul 2025 -

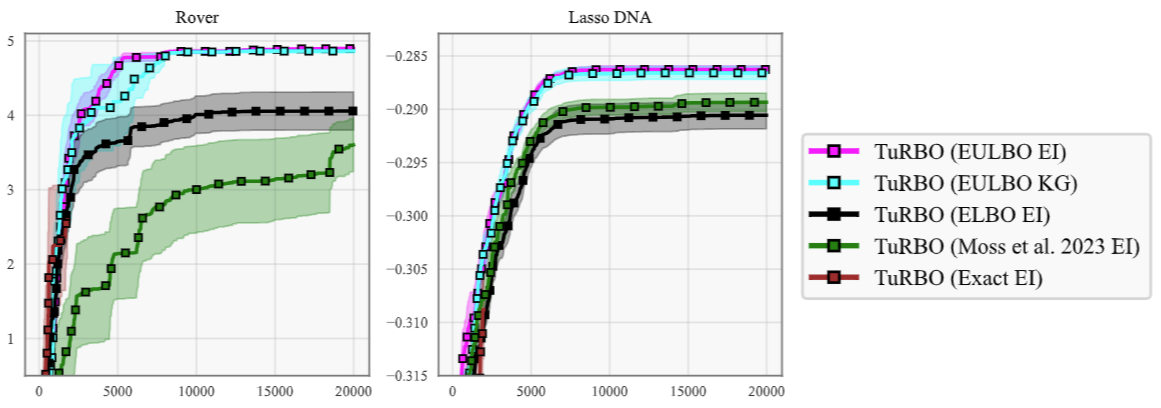

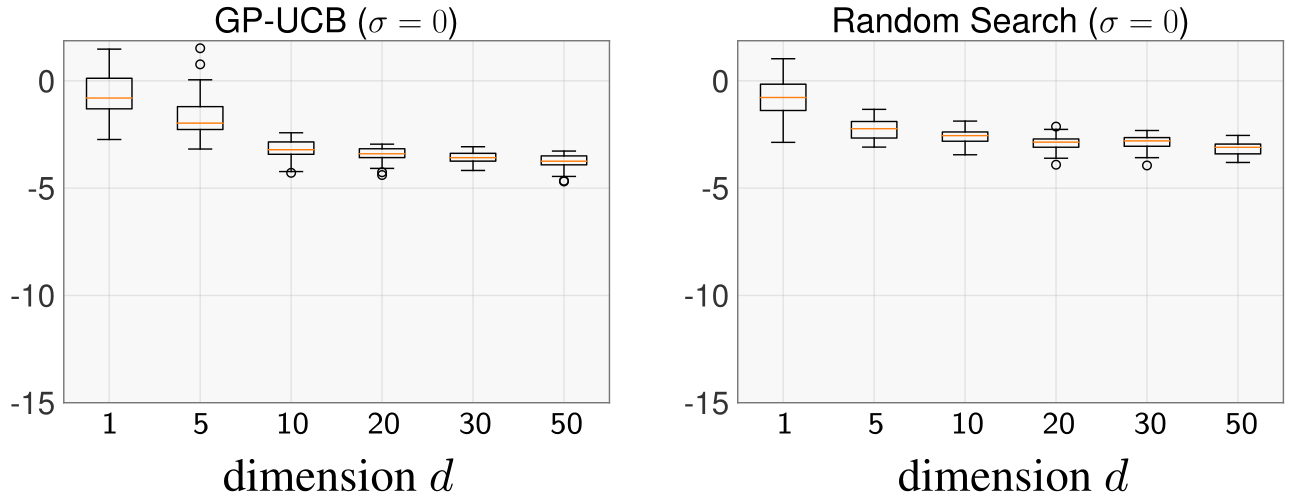

Approximation-Aware Bayesian OptimizationIn Advances in Neural Information Processing Systems Dec 2024

Approximation-Aware Bayesian OptimizationIn Advances in Neural Information Processing Systems Dec 2024 -

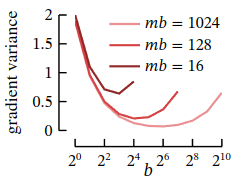

Demystifying SGD with Doubly Stochastic GradientsIn Proceedings of the International Conference on Machine Learning (ICML) Jul 2024

Demystifying SGD with Doubly Stochastic GradientsIn Proceedings of the International Conference on Machine Learning (ICML) Jul 2024 -

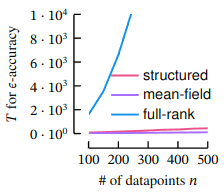

Provably Scalable Black-Box Variational Inference with Structured Variational FamiliesIn Proceedings of the International Conference on Machine Learning (ICML) Jul 2024

- Stochastic Approximation with Biased MCMC for Expectation-Maximization. In Proceedings of the International Conference on Artificial Intelligence and Statistics (AISTATS), May 2024

Stochastic Approximation with Biased MCMC for Expectation-MaximizationIn Proceedings of the International Conference on Artificial Intelligence and Machine Learning (AISTATS) May 2024 -

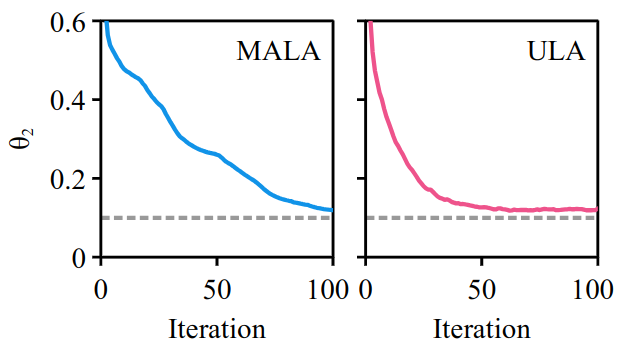

Linear Convergence of Black-Box Variational Inference: Should We Stick the Landing?In Proceedings of the International Conference on Artificial Intelligence and Machine Learning (AISTATS) May 2024

- On the Convergence of Black-Box Variational Inference. In Advances in Neural Information Processing Systems, Dec 2023

- The Behavior and Convergence of Local Bayesian Optimization. In Advances in Neural Information Processing Systems, Dec 2023